International Conference on Mathematical and Computational Linguistics for Proofs

The videos of the talks are available on Youtube.

For more photos, scroll down to the end of the page.

This conference is part of the EuroProofNet Symposium. See the symposium page to get information on the other co-located events.

Date: 15-18 September 2025

Venue: Institut Pascal, 530 Rue André Rivière, 91400 Orsay [access] [hotels] [food]

Organizers: Roussanka Loukanova (rloukanova - gmail) and Axel Ljungström (axel.ljungstrom - gmail)

Registrations are closed

Talk proposals and updates by authors: instructions, procedure

Submission and Updates by authors of titles and abstracts of their talks and demos: https://easychair.org/conferences?conf=mclp2025

Invited speakers: Listed Below

Program (tentative) Abstracts are given after the schedule.

Please note that you have to organize yourself for lunches (and dinners), but there are many options around.

Monday, Sep 15 2025

| 09:00-10:00 | Reinhard Muskens, University of Amsterdam | Linguistic Interpretation—from Left to Right (KeyNote) |

| 10:00-10:30 | S | T |

| 10:30-11:00 | break | break |

| 11:00-12:00 | Stephan Schulz, DHBW Stuttgart | LLM+ vs. Logic+ The Future of the AI Math Ecosystem (KeyNote) |

| 12:00-12:30 | S | T |

| 12:30-14:00 | lunch | lunch |

| 14:00-15:00 | Aarne Ranta, Chalmers University of Technology and University of Gothenburg | Symbolic Informalization: Fluent, Productive, Multilingual (Invited) (slides) |

| 15:00-15:30 | S | T |

| 15:30-16:00 | break | break |

| 16:00-17:00 | Krasimir Angelov, Chalmers University of Technology and University of Gothenburg | Multilingual Natural Language Generation on a Large Scale (Invited) (slides) |

| 17:00-17:30 | S | T |

| 17:30-18:30 | (*) happy hour |

Tuesday, Sep 16 2025

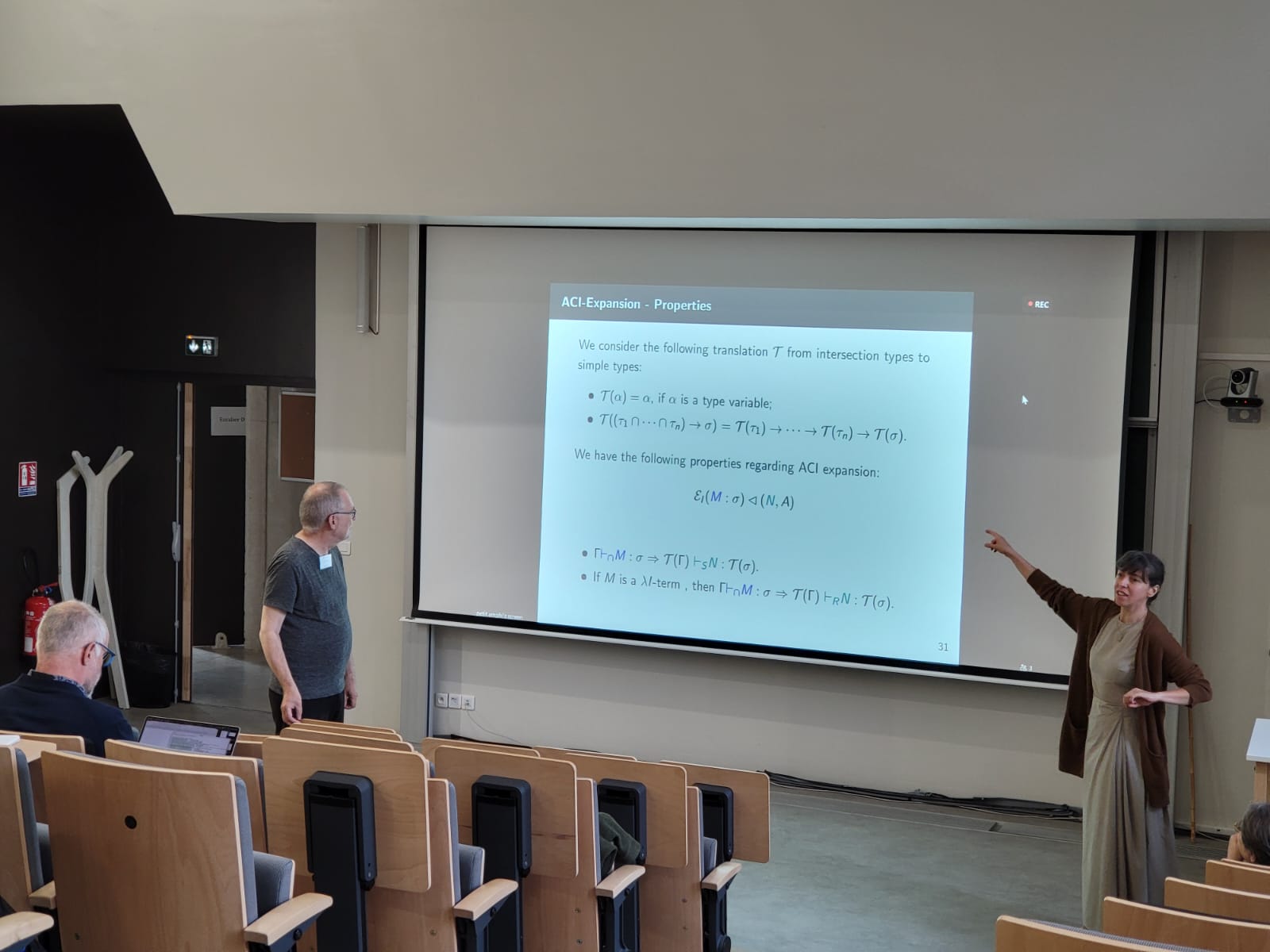

| 09:00-10:00 | Sandra Alves, University of Porto | Structural Rules and Algebraic Properties of Intersection Types (Invited) (slides) |

| 10:00-10:30 | S | T |

| 10:30-11:00 | break | break |

| 11:00-12:00 | Roussanka Loukanova, Bulgarian Academy of Sciences | Pure versus Generalised Quantifiers (slides) |

| 12:00-12:30 | S | T |

| 12:30-14:00 | lunch | lunch |

| 14:00-15:00 | Daisuke Bekki, Ochanomizu University | A Proof-theoretic Investigation of Natural Language Semantics (Invited) (slides) |

| 15:00-15:30 | Denis Bechet, LS2N - Nantes University and Annie Foret, IRISA and Univ Rennes 1 | Categorial Dependency Grammars Extended with Typed Barriers (slides) |

| 15:30-16:00 | break | break |

| 16:00-17:00 | Wojciech Buszkowski, Adam Mickiewicz University | Sequent systems for Lambek calculi and their extensions: a survey of recent results (Invited) (slides) |

| 17:00-18:00 | Philippe de Groote, Inria Nancy - Grand Est, France | Some observations about plurals in mathematics (Invited) (slides) |

| 18:00-18:30 | S | T |

Wednesday, Sep 17 2025

| 09:00-10:00 | Adrian De Lon, University of Bonn, Germany | Natural Language Understanding In Natural Proof Checking (Invited) (slides) |

| 10:00-10:30 | S | T |

| 10:30-11:00 | break | break |

| 11:00-11:30 | Shashank Pathak, The University of Manchester | Extending Flexible Boolean Semantics for the Language of Mathematics (slides) |

| 11:30-12:30 | Michael Kohlhase, Computer Science, FAU Erlangen-Nürnberg, Germany | Active Flexiformal Mathematics (in particular Proofs) - Methods, Resources, and Applications (Invited) (slides) |

| 12:30-14:00 | lunch | lunch |

| 14:00-15:00 | Andrija Urosevic and Sana Stojanovic-Durdevic, University of Belgrade, Faculty of Mathematics | From Formal Natural Deduction Proofs to LaTeX Proof Trees (slides) |

| 15:00-15:30 | S | T |

| 15:30-16:00 | break | break |

| 16:00-17:00 | Sana Stojanovic-Durdevic and Danijela Simic, University of Belgrade, Faculty of Mathematics | Proof by Cases in Euclidean Geometry (slides) |

| 17:00-18:00 | Jørgen Villadsen, Technical University of Denmark, Denmark | On Natural Deduction and Axioms for Propositional and First-Order Logic (Invited) (slides) |

| 18:00-18:30 | S | T |

Thursday, Sep 18 2025; Joint Sessions from 11:00, MCLP and WG3

| 09:00-09:30 | Barba da Costa, MIT, US, at al. | Disambiguating Natural Language Proofs with Bayesian Inference (slides) |

| 09:30-10:00 | Fabian Zaiser, MIT, US, at al. | Automatic (In)formalization of Mathematics via Language-Model Probabilistic Programming and Cycle Consistency (slides) |

| 10:00-10:30 | Georgios V. Pitsiladis and Petros Stefaneas, National Technical University of Athens | Using the Theory of Institutions for Semiotic–Argumentational Treatment of Mathematical Proof (slides) |

| 10:30-11:00 | break | break |

| 11:00-12:00 | Frédéric Tran Minh, UGA-Grenoble INP-Esisar, Laure Gonnord, Grenoble-INP / LCIS, and Julien Narboux, RIF - Université Paris Cité | A Lean-based Language for Teaching Proof in High-school (slides) |

| 12:00-12:30 | S | T |

| 12:30-14:00 | lunch | lunch |

| 14:00-14:45 | Adrian Riesco and Juan Rodriguez-Hortala, Universidad Complutense de Madrid | Singular and Plural Functions (Invited) (slides) |

| 14:45-15:30 | Roussanka Loukanova, Bulgarian Academy of Sciences | Parametric Information via Type Theory of Acyclic Algorithms (slides) |

| 15:30-16:00 | break | break |

| 16:00-17:00 | Lawrence S. Moss, Indiana University, Bloomington, US | Natural Logic: Proof Systems for Reasoning in Natural Language (KeyNote) (slides) |

| 17:00-17:30 | S | T |

| 17:30-18:30 | (*) happy hour |

Speakers and Abstracts

- Reinhard Muskens, University of Amsterdam, The Netherlands

Linguistic Interpretation—from Left to Right (KeyNote)

Abstract: In this talk I will show how it is possible to model the interpretation of ordinary language “from left to right’’, following the linguistic precedence order, that is. Given the expected audience, I will focus on explaining some of the central aims of linguistic semantics and on showcasing how logical techniques can play a pivotal role in linguistic theory formation.

- Stephan Schulz, DHBW Stuttgart, Germany

LLM+ vs. Logic+ The Future of the AI Math Ecosystem (KeyNote)

Abstract: We compare and contrast an LLM+ and a Logic+ architecture for future AI-based mathematical tools. We argue that the underlying knowledge representation of the computerized mathematics ecosystem must be based on formal logic, not on distributed weights modelling probabilities, and that hence the Logic+ approach is technically more viable.

In a Logic+ architecture, theorem provers maintain the integrity of mathematical knowledge both by consistency checking and by ensuring sound derivation for new results. However, other AI techniques, including LLMs, can help, e.g., in auto-formalisation of mathematical knowledge, in search control, and in the hypothesis generation part of automatic theory exploration.

- Lawrence S. Moss, Indiana University, Bloomington, United States

joint sessions: MCLP + WG3

Natural Logic: Proof Systems for Reasoning in Natural Language (KeyNote)

Abstract: This talk has two goals. The first is to give a very general introduction to the program of “natural logic’’ (Sanchez-Valencia. 1991). The overall idea is to do logic in natural language as much as possible, ideally without translation into standard logical systems like first-order logic. There is a large and growing family of such logical systems, together with soundness/completeness theorems and in some cases with implementations. The main difference between the proof systems here and more familiar ones is that we start with the simplest systems in logic, syllogistic systems and their extensions, and then slowly incorporate more grammatical features.

The other goal of the talk is to connect with people in the EuroProofNet area, especially people interested in the MCLP Conference and perhaps also with the Workshop on Program Verification. I will talk about some relatively new work (joint with Antonio Badia) on connections of natural logic and description logic, and also on ongoing work on running systems for natural language inference which have as a component the logical systems from this area.

- Daisuke Bekki, Ochanomizu University, Japan

A Proof-theoretic Investigation of Natural Language Semantics (Invited Talk)

Abstract: Type-theoretical semantics (TTS) offers a proof-theoretic alternative to model-theoretic semantics, using dependent types for meaning representation. Dependent type semantics (DTS), a form of TTS, employs unspecified types (@-types) to compositionally analyze anaphora and presuppositions, serving as a proof-theoretic counterpart to dynamic semantics. DTS can explain certain anaphoric phenomena other frameworks cannot, highlighting the importance of “types depending on proofs” in natural language semantics. The talk will overview DTS and demonstrate its application to linguistic phenomena like anaphora resolution, presupposition binding, and inference within a unified framework, emphasizing proof theory’s fundamental role in meaning.

- Aarne Ranta, Chalmers University of Technology and University of Gothenburg, Sweden

Symbolic Informalization: Fluent, Productive, Multilingual (Invited Talk)

Abstract: Symbolic informalization enables a reliable conversion of formal mathematics to natural language. It can also be used for creating training data for autoformalization. This paper outlines the project Informath, which aims to show how symbolic informalization can produce text with natural variation, be developed with a reasonable effort, and address multiple formal and natural languages simultaneously.

- Krasimir Angelov, Chalmers University of Technology and University of Gothenburg, Sweden

Multilingual Natural Language Generation on a Large Scale (Invited Talk)

Abstract: Natural Language Generation has been studied predominantly within a single language, most often English. In this talk we will see that this does not have to be the case. Moreover, the generation can span wide domains and be deployed on a large scale. This is made possible by the semi-automatic creation of multilingual resources for over 200 languages.

- Adrian De Lon, University of Bonn, Germany

Natural Language Understanding In Natural Proof Checking (Invited Talk)

Abstract: The Naproche project aims to model informal mathematical English and facilitate natural mathematical proof checking through the implementation of the Naproche interactive theorem prover. In this talk, I will present the syntax and semantics of Naproche-ZF and discuss the processing techniques employed in the theorem proving software.

- Denis Bechet, LS2N - Nantes University, France and Annie Foret, IRISA and Univ Rennes 1, France

Categorial Dependency Grammars extended with typed barriers (Invited Talk)

Abstract: We consider the family of Categorial Dependency Grammars (CDG),as computational grammars for language processing. CDG are a class of categorial grammars defining dependency structures. The formalism can be viewed as a formal proof system on CDG types, with a finite set of rules, combining the classical categorial grammars’ elimination rules with valency pairing rules that are able to definenon-projective (discontinuous) dependencies.

A CDG lexicon attaches types to words. A sentence is said correct when a sequence of types attached to its words entails a particular type, the proof derivation then reflects the sentence dependency structure.

This article is motivated by a need to limit the scope of non-projective dependencies. A previous proposal extended CDG with a single barrier to give an AFL (Abstract Family of Languages). In this article we introduce typed barriers. Typed barriers should be more convenient to model some fine-grained linguistic phenomenon in a simple andmodular way, while preserving good theoretical properties. This proposal allows to prevent some undesired analysis and also may guide the parsing more efficiently. We provide a new type language and rule system that generalize previous CDG formula-tions and consider this new system in terms of expressivity, parsing and efficiency.

- Shashank Pathak, The University of Manchester, United Kingdom

Extending Flexible Boolean Semantics for the Language of Mathematics

Abstract: In this talk, we discuss how the linguistic phenomena of coordination, definite descriptions, distributive and collective predicates, and selectional restrictions give rise to different entailment patterns in the Language of Mathematics (LoM). LoM refers to the English prose and symbolic expressions found in pure mathematics textbooks and research articles.

We use flexible Boolean semantics as our semantic framework. Boolean semantics is a branch of compositional model-theoretic semantics that leverages Boolean algebraic properties of semantic domains to analyse natural language. While standard Boolean semantics typically relies solely on function application to combine denotations, flexible Boolean semantics extends it by employing phonologically covert operators as well.

Winter applies flexible Boolean semantics to analyse how coordination and definite descriptions interact with distributive and collective predicates in English. In this talk, we evaluate his framework using data from LoM. The lack of vagueness in LoM makes it an ideal testbed for semantic theories. Concerning applications, developing semantic theories for LoM can support the construction of mathematical formalisation tools. We first present an example from LoM where Winter’s theory fails to yield satisfactory results. We then propose extensions to the type system and operators, and demonstrate how these modifications address the issue.

- Frédéric Tran Minh, UGA-Grenoble INP-Esisar, France; Laure Gonnord, Grenoble-INP / LCIS, France; and Julien Narboux, RIF - Université Paris Cité, France

joint sessions: MCLP + WG3

A Lean-based Language for Teaching Proof in High-school

Abstract: Now pervasive in many mathematics and computer science research domains, proof assistants have recently gained importance in education, mostly during the college years. In this talk, we propose a new learning environment as a layer above the Lean proof assistant, specially targeting high-school level proofs. Inspired by other adaptations of proof assistants for teaching such as Lean-Verbose, Coq Waterproof and Proof Buddy, and also by coherent logic, we designed Yalep, a declarative controlled natural language, with a minimal number of syntactic constructions, that favors forward-chaining of facts. Yalep provides convenience for type theory hiding, and functions defined on type subsets. This paper presents the design choices and implementation of these features.

- Adrian Riesco, Universidad Complutense de Madrid and Juan Rodriguez-Hortala

joint sessions: MCLP + WG3

Singular and Plural Functions (Invited Talk)

Abstract: Modern functional logic programming languages use non-terminating and non-confluent constructor systems as programs in order to define non-strict and non-deterministic functions. Two semantic alternatives have been usually considered for parameter passing with this kind of functions: call-time choice and run-time choice. While the former is the standard choice of modern FLP languages, the latter lacks some basic properties – mainly compositionality – that have prevented its use in practical FLP systems. Traditionally it has been considered that call-time choice induces a singular denotational semantics, while run-time choice induces a plural semantics. We have discovered that this latter identification is wrong when pattern matching is involved, and thus in this paper we propose two novel compositional plural semantics for CSs that are different from run-time choice.

- Sandra Alves, University of Porto

Structural Rules and Algebraic Properties of Intersection Types (Invited Talk)

Abstract: In this talk we define several notions of term expansion, used to define terms with less sharing, but with the same computational properties of terms typable in an intersection type system. Expansion relates terms typed by associative, commutative and idempotent intersections with terms typed in the Curry type system and the relevant type system; terms typed by non-idempotent intersections with terms typed in the affine and linear type systems; and terms typed by non-idempotent and non-commutative intersections with terms typed in an ordered type system.

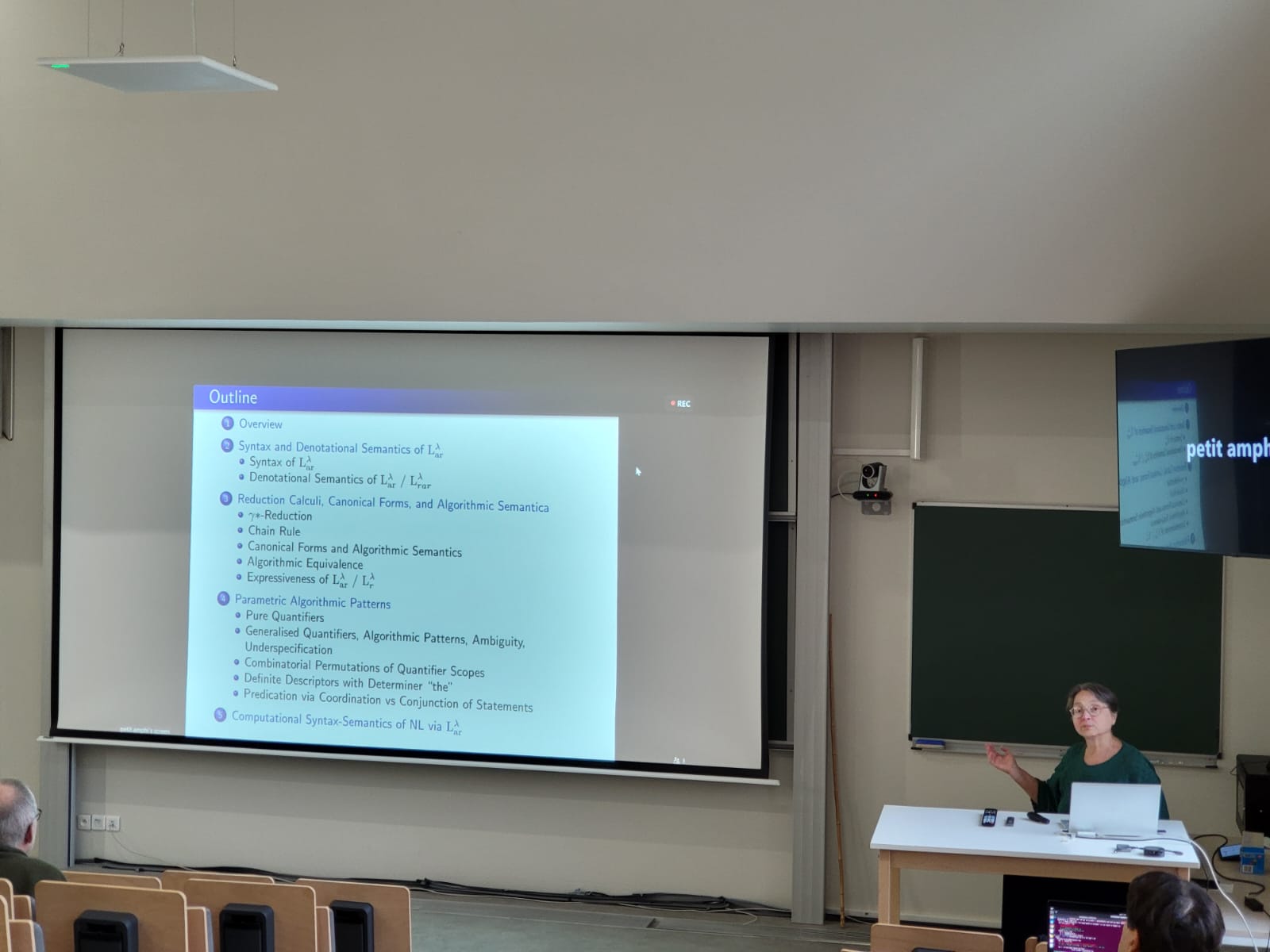

- Roussanka Loukanova, Bulgarian Academy of Sciences (BAS), Bulgaria

Pure versus Generalised Quantifiers

Abstract: In my talk, I will present several approaches to representing quantificational sentences of natural language (NL) by formal languages of logic. I will focus on computational syntax-semantics of domain-dependent fragments of NL of mathematics, covering mathematical descriptions, definitions, statements, and proofs, by translations between NL expressions and formal languages of logic.

In the major part of the talk, I will introduce a development of Moschovakis (2006) type-theory of recursion (TTR) and algorithms (TTA), their versions, formal languages, and reduction calculi. Adding a criterion for termination, by the Acyclicity Constraint, limits TTR and TTA to type-theory of acyclic recursion (TTAR) and acyclic algorithms (TTAA). TTR has two layers of computational semantics: algorithmic and denotational semantics. The canonical form of each algorithmically meaningful term determines the algorithm for computing its denotation. TTR provides special algorithmic and semantic adequateness to computational syntax-semantics of formal and natural languages.

TTR is a proper, strict extension of the language LCF introduced by Gordon Plotkin (1977). TTR provides an algorithmic generalisation of the let-operator, for the let-expressions originally introduced by Dana S. Scott (1993). Moschovakis (2006) and Loukanova (2023, 2024) prove that TTR is a proper, strict extension of Gallin TY2. Thus, TTR is a strict extension of Montague Intensional Logic (MIL), via Gallin (1975) interpretation of MIL into TY2. In TTR, there are recursion terms that are neither algorithmically nor otherwise semantically equivalent to any lambda-terms.

In addition to algorithmic semantics, TTR provides more subtle semantic interpretations and distinctions for formal and natural languages. Essentially, TTR supports rich varieties of quantification, over all types, via terms of:

(1) generalised quantifiers (Loukanova, 2016-2025)

(2) pure logic quantifiers (Loukanova, 2019-2025)

The choice between various terms depends on the computational grammars of NL and the associated syntax-semantics analyses of NL expressions in the respective grammars. Typically, the proof and verification systems use pure quantifiers. I will introduce rules for translations between pure logic quantifiers and corresponding generalised ones, at the object level of TTR.

The implementation of translators between NL expressions and formal languages of proof and verification systems is productive work, which is open, promising, and provides reliability. The algorithmic characteristics of TTAR and its semantic expressiveness make it valuable for computational syntax-semantics interfaces in computational grammars of NL, and, especially for computational transfers between NL of mathematics and systems of proof and verification.

- Jørgen Villadsen, Technical University of Denmark, Copenhagen, Denmark

On Natural Deduction and Axioms for Propositional and First-Order Logic (Invited Talk)

Abstract: We present a concise formalization in the proof assistant Isabelle/HOL of classical propositional and first-order logic, based on natural deduction and axioms, and where the completeness theorem hold for languages of arbitrary cardinalities.

- Andrija Urosevic and Sana Stojanovic-Durdevic, University of Belgrade, Faculty of Mathematics, Serbia

From Formal Natural Deduction Proofs to LaTeX Proof Trees

Abstract: Writing formal proofs in first-order logic (FOL) is relatively straightforward, but there are cases where the proving process is far from trivial. Moreover, interpreting and understanding these formal proofs is not trivial and can be very time consuming. In this paper, we try to solve this problem by automatically generating LaTeX proof trees for formal natural deduction proofs of FOL formulas. First, we introduce the proof assistant Theodore, which is used to prove FOL formulas, and then present a method for generating reliable LaTeX proof trees from proofs obtained with the proof assistant Theodore.

- Sana Stojanovic-Durdevic and Danijela Simic, University of Belgrade, Faculty of Mathematics, Serbia

Proof by Cases in Euclidean Geometry

Abstract: We recognize the advances in the field of automated theorem provers (ATP) and interactive theorem provers (ITP) leading to a great contribution in all areas of mathematics and computer science. Therefore, we wanted to use automated tools for proof construction and verification, with emphasis on the value of “humanly understandable and checkable proofs”. To this end, we formulated a semi-formal language in coherent logic, close to the language of geometry proofs, extended by “proofs by cases” to preserve and verify the original proof form.

- Georgios V. Pitsiladis and Petros Stefaneas, National Technical University of Athens, Greece

Using the Theory of Institutions for Semiotic–Argumentational Treatment of Mathematical Proof

Abstract: Proof events have been introduced by J. Goguen, as a means to semiotically study how mathematical proofs are built in practice, and with the aim to serve as a substrate for generic proof assistants that should incorporate non-formal elements of mathematical proving. In short, proof attempts consist of a sequence of events unfolding in time, wherein some entities try to argue (possibly partially and/or non-formally and/or non-correctly) for a given claim and some entities validate the arguments. The notion has been further developed in the work of P. Stefaneas and I. Vandoulakis, where a simple formalisation of proof events is sketched. Later, APEC (by S. Almpani, P. Stefaneas, and I. Vandoulakis) has taken a more formal, but less abstract, approach in the context of argumentation theory.

The theory of institutions is an abstract theory, grounded on category theory, that describes logical systems as structures where models are related to some syntax via a satisfaction relation; this is optionally complemented by a proof system. Institutions can also formally describe translations between logics. Thus, institutions are suitable for studying elements of (the meaning of) proof events independently of the logic underlying every such event.

In the work to be presented, we first created an abstract and comprehensive formalisation of the notion of a mathematical problem, based on a formal institutional definition. The central idea is that every mathematical problem consists of four elements: a selection of an institution, a signature in the said institution, a set of sentences in the signature acting as premises, as well as sentence in the same signature acting as the claim to be proved.

We have also sketched a range of proving (proof and disproof) objects that can incorporate proof attempts of varying levels of formality. We were then able to (re)define proving events (as tuples consisting of a problem, a proving object, and other elements logging which agents paricipated, when the event happened, and what its outcome was) and hypergraphs (dubbed fluents) of related proving events. This substrate enables modelling proof attempts that may span multiple logical systems, while also being able to study their semantic relationships.

Following this semantic viewpoint, we have also viewed problems and proving events argumentationally, focusing on how relations of support and attack can be defined in our framework, how support/attack may be deduced from the structure of problems and proving events, and how (joint) support can be thought of as a description of the notion of subproblem.

- Fabian Zaiser, Massachusetts Institute of Technology, United States; [Mauricio Barba], Massachusetts Institute of Technology, United States; Katherine M. Collins, University of Cambridge, United Kingdom; Joshua Tenenbaum, Massachusetts Institute of Technology, United States; Timothy O’Donnell, McGill University; Mila – Québec AI Institute, Canada; Alexander K. Lew, Massachusetts Institute of Technology; Yale University, United States; [Vikash K. Mansinghka], Massachusetts Institute of Technology; Yale University, United States; Cameron Freer, Massachusetts Institute of Technology; Yale University, United States

Automatic (In)formalization of Mathematics via Language-Model Probabilistic Programming and Cycle Consistency

Abstract: Automatic translation between natural-language mathematics and formal text for interactive theorem provers often uses large language models (LLMs), but they can be unreliable, producing syntax or type errors. Such issues can be mitigated by controlled generation, where LLMs are steered by hard constraints (e.g., syntactic validity, type checking) or soft signals (e.g., critique by another LLM). Recent approaches frame controlled generation as probabilistic inference, conditioning LLM outputs on these constraints. Language-model probabilistic programming can perform Bayesian inference using sequential Monte Carlo (SMC) to generate asymptotically correct samples under diverse constraints.

We use language-model probabilistic programming for auto(in)formalization, steering an LLM using feedback (such as syntax/type errors and tactic failures) from Lean, along with constraints to exclude trivial or obviously false statements (via counterexample generation). We also combine formalization and informalization via a cycle-consistency constraint. These techniques allow us to increase formalization accuracy and decrease costs by leveling up smaller models.

Cycle consistency prefers formalizations whose informalizations are close to the original. Step (a) samples from the distribution of an LLM (DeepSeek-R1-Distill-Llama-8B) which is prompted to formalize the input (optionally using controlled generation). Step (b) scores the formalizations according to the log-probability that the LLM generates the original input text when it is prompted to informalize the given formalization. Inaccurate formalizations (like the third, which uses “≥ 0” instead of “> 0”) rank lower.

- Mauricio Barba da Costa, Massachusetts Institute of Technology, United States; Katherine M. Collins, University of Cambridge, United Kingdom; Fabian Zaiser, Massachusetts Institute of Technology; Alexander K. Lew, Massachusetts Institute of Technology; Yale University, United States; Vikash K. Mansinghka, Massachusetts Institute of Technology; Yale University, United States; Timothy O’Donnell, McGill University; Mila – Québec AI Institute, Canada; Joshua Tenenbaum, Massachusetts Institute of Technology, United States; Cameron Freer, Massachusetts Institute of Technology; Yale University, United States

Disambiguating Natural Language Proofs with Bayesian Inference

Abstract: Automatically formalizing mathematical statements allows mathematicians to take messy, informal proofs and verify that they are correct using interactive theorem provers. However, the success of formalization hinges on it appropriately capturing the mathematicians’ intent in their informal proof. Inferring the intended meaning of proof statements is non-trivial. Informal proofs are replete with ambiguity, as mathematicians often skip proof details, do not specify the types of variables, or omit underlying hypotheses.

Ambiguous informal mathematical statements admit multiple possible formalizations (shown as Lean fragments). Ambiguity can arise from uncertain variable domains (left), pronoun referents (center), or order of quantifiers (right). We score each formalization according to multiple signals (including computational models of human cognition, mathematics domain knowledge, and type-checking/validity checks from Lean) in order to contextually ground symbols and types based on surrounding text and usage.

Bayesian approaches can be effective at disambiguation, incorporating both domain knowledge and intuitive plausibility. We perform Bayesian inference via language model probabilistic programming to choose among multiple plausible formalizations, using sequential Monte Carlo (SMC) to sample from an LLM constrained by hard syntactic requirements and soft preferences over the likely intentions of a human mathematician. These constraints allow us to disambiguate texts by balancing cognitive plausibility, context, syntactic parsing, and mathematical correctness.

- Wojciech Buszkowski, Adam Mickiewicz University, Poznan, Poland

Sequent systems for Lambek calculi and their extensions: a survey of recent results (Invited Talk)

Abstract: This talk presents sequent systems for different extensions of Lambek calculi L and NL (non-associative L). We focus on two important proof-theoretic properties of these systems: cut elimination and interpolation. We survey the cut-elimination theorems for several systems, also enriched with non-logical axioms (assumptions). Interpolation for non-associative logics is more efficient than for associative logics: the interpolant is a subformula of some formula appearing in the provable sequent or in an assumption. We show some consequences of these properties, which are interesting for computational linguistics.

- Michael Kohlhase, Computer Science, FAU Erlangen-Nürnber, Germany

Active Flexiformal Mathematics (in particular Proofs) - Methods, Resources, and Application (Invited Talk)

Abstract: In the last decades we have developed a paradigm of flexiformal modular representation of mathematics, where semantic annotations can be exploited for reader/learner support services that can be integrated into dynamic (HTML-based) presentations: active documents. We have tested active documents as a basis for AI-supported education and scientific communication on over 500-1000 students per semester over the last years.

This endeavor crucially depends on the understanding and processing of mathematical language. This talk will concentrate on two aspects.

I will present the OMDoc annotation ontology (with a special emphasis on flexiformal proofs), and its implementation in the sTeX (semantic LaTeX) package, as well as the sTeX corpus (ca. 10.000 pages of foundations and course materials in Math and CS). Particular focus will be on how that corpus can be used for the generation of linguistic resources for rigorous mathematical language. I will present on a collaborative project to generate a semantic lexicon (with formula notations) for mathematics (MathLex) and discuss how that can profit from the sTeX corpus.

In formal mathematics, the transport of theorems via theory (or record type) interpretations is well-established. I contend that in flexiformal mathematics, this is even more important: more objects, e.g. problems/solutions can be transported; and the interpretations are important learning objects in themselves. I will report on recent work to lift the transport (called relocalization in the flexiformal setting) to the level of natural language transformation and report on how this can be implemented using GF abstract syntax trees.

- Philippe de Groote, Inria Nancy - Grand Est, France

Some observations about plurals in mathematics (Invited Talk)

Abstract: In this talk, we will review issues related to the semantics of plurals in natural language and demonstrate how these issues arise in the case of mathematical texts. In particular, we will focus on the distinction between collective and distributive predicates. Then, we will sketch the lines of a compositional treatment of plurals in textual mathematics.